In mid-September 2025, Google quietly removed the URL parameter &num=100. This small change didn’t affect rankings but shook the SEO world because it limited how much data tools could collect at once.

For years, SEO tools used this parameter to retrieve 100 results on a single page instead of the default 10. Google’s removal is widely viewed as an attempt to ease server load, curb large-scale data scraping by third parties, and push the industry toward authorized tools such as Google Search Console (GSC). The change has caused a roughly 10-fold increase in data-collection costs for SEO tools, which now need 10 separate requests to retrieve the same 100 results.

This article looks at how tools are reacting, what challenges they face, and what SEOs should keep an eye on. So, let’s begin!

The Real Effect on SEO Data

Ending &num=100 has redefined how SEO data is collected. It marks the beginning of a new era focused on cleaner, more accurate data.

Visibility Metrics Distorted

Most SEO tools rely on deep ranking data to estimate keyword counts and visibility scores. Without access to results beyond the first page, tools now report abrupt declines in ranking keywords and site visibility. In reality, traffic has not changed; only the method of data collection is different.

Rank Trackers Affected

Rank tracking tools are the most affected:

- 10x Cost Increase: Previously, a single query returned the top 100 results. The same coverage now takes 10 queries, which makes data collection 10 times more expensive. Tools must either raise prices or reduce the amount of data they monitor.

- Less Precise Data: Pagination introduces delays and inconsistencies. Consequently, rank snapshots are less reliable, particularly beyond the top 20 results.

- Delayed Reporting: It is now harder to gather the same volume of data in the same amount of time. This means ranking changes on Google take longer to appear in SEO dashboards, which reduces the responsiveness of SEO teams.

Business Impact

Beyond third-party tool data, the removal also caused what some describe as a “Great Decoupling” in Google Search Console (GSC) metrics, which is a beneficial long-term adjustment:

- Impressions Dropped: An impression is counted only when a user (or bot) loads a page on which the search result appears. Because many SEO and AI bots used &num=100 to load 100-result pages, they artificially inflated impressions for results in positions 11-100. These bot-generated impressions vanished with the parameter, and reported GSC impressions dropped sharply, especially on desktop.

- Average Position Improved: Once the inflated impressions at low-ranking positions (e.g., position 87) were removed, the average position for most sites and queries shifted to a more realistic value that reflects where human searchers actually see the site. The improved average position now tracks actual user behavior much more closely, since most users click or scroll only within the first or second page of results.

- CTR Increased: The overall Click-Through Rate (CTR) for many sites unexpectedly increased, offering a cleaner, more realistic view of content performance as Clicks remained stable while Impressions dropped dramatically.

Businesses should, therefore, understand that this change is a measurement reset, not a genuine traffic slump, and it leads to a much cleaner signal-to-noise ratio in GSC data.
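The arithmetic behind these three shifts can be illustrated with a minimal sketch. All numbers below are hypothetical, and the impression-weighted average is a simplification of how GSC reports average position; the point is only to show why removing deep, bot-driven impressions lowers impressions, improves average position, and raises CTR while clicks stay flat.

```python
from dataclasses import dataclass


@dataclass
class Bucket:
    """Impressions recorded at one ranking position (hypothetical numbers)."""
    position: float
    impressions: int


def summarize(buckets, clicks):
    """Return (total impressions, impression-weighted average position, CTR)."""
    total = sum(b.impressions for b in buckets)
    avg_position = sum(b.position * b.impressions for b in buckets) / total
    ctr = clicks / total
    return total, avg_position, ctr


# Before: human impressions on page one plus bot-driven impressions deep in the SERP.
before = [Bucket(position=4, impressions=1000), Bucket(position=60, impressions=3000)]
# After: the bot-driven impressions are gone; human impressions are unchanged.
after = [Bucket(position=4, impressions=1000)]
clicks = 50  # clicks come from humans, so they are identical in both periods

for label, buckets in (("before", before), ("after", after)):
    total, avg_position, ctr = summarize(buckets, clicks)
    print(f"{label}: impressions={total}, avg position={avg_position:.1f}, CTR={ctr:.2%}")
# before: impressions=4000, avg position=46.0, CTR=1.25%
# after: impressions=1000, avg position=4.0, CTR=5.00%
```

Nothing about the site changed between the two rows; only the bot-generated bucket was removed.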

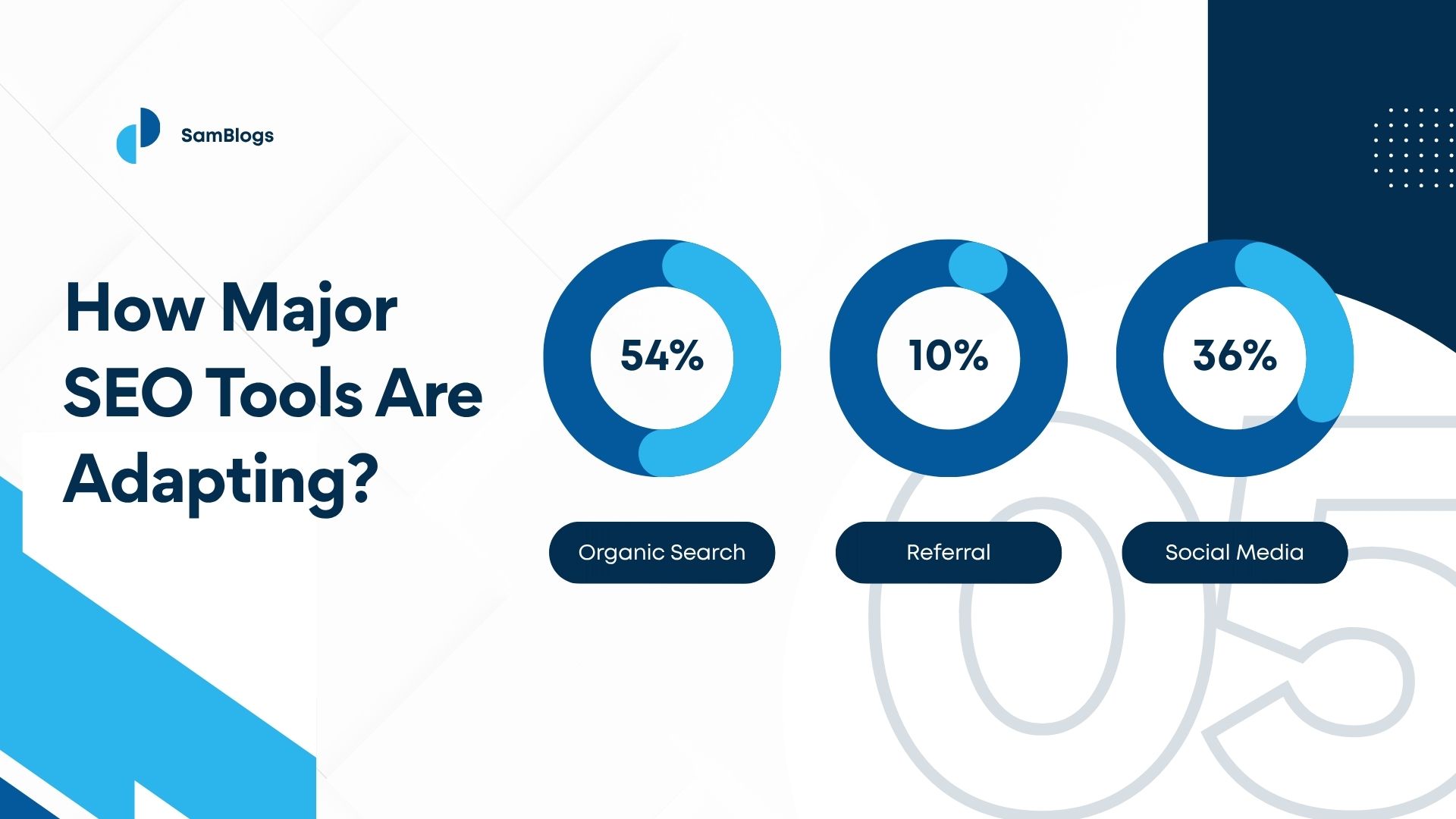

How Major SEO Tools Are Adapting

Major SEO tools quickly recognized the disruption and are rolling out new strategies to safeguard data integrity and contain the higher operational costs that resulted.

They are commonly moving away from bulk scraping, which was more cost-efficient, toward multi-request fetching and a renewed focus on top-of-funnel, high-value rankings.

Semrush

Semrush took action to calm the fears of its customers by stating that its essential services are still working. They announced that their platform still shows the Top 10 as well as the Top 20 positions, meaning that they are closely monitoring the most critical “striking distance” keywords (positions 11-20).

This indicates that they have switched to multi-request pagination for at least the first two SERP pages, so the data most critical to their users’ strategies is preserved. Semrush used its robust infrastructure to adjust quickly, minimizing disruption to clients and prioritizing the most commercially relevant data points. Looking ahead, the company has taken responsibility for long-term data stability by revising its internal data aggregation practices.

Ahrefs

Ahrefs also acknowledged that the change affected the entire industry. Initially, it reported that its third-party data providers temporarily had access only to first-page results (positions 1-10), which reduced visibility for positions 11 and beyond. This situation called for a strategic decision on what to prioritize.

Ahrefs has committed to following developments and building solutions that minimize workflow disruption. This will probably involve refining its scraping and data aggregation methods to restore deep coverage, as far as that is cost-effective.

Because the platform depended directly on the parameter, its deep-SERP coverage was temporarily reduced. A strategic pivot toward relying more on its existing extensive keyword index data was therefore necessary.

Zutrix

Although public updates vary from tool to tool, Zutrix likely faced the same cost and data-depth problems as the others. As a rank-tracking specialist, its adjustments most likely included:

- More API calls: Scanning the top 100 results now takes ten separate requests instead of one, which significantly increases cloud computing and anti-bot circumvention costs. To offset the increase, many dedicated trackers use caching strategies in which deep SERPs are fully rescanned only when top-of-page ranks for related keywords change.

- Tiered Tracking: Deep, daily tracking can be prioritized for premium or enterprise clients, while the frequency and depth of tracking on basic plans are adjusted to manage costs. This tiered approach effectively passes the increased data cost on to the clients who need the most granular, deep-page competitive intelligence.

- Feature Integration: Rather than relying on rank tracking alone, developers can put more effort into integrating Local Pack and SERP feature tracking, which use different data retrieval mechanisms, to maintain the platform’s overall value.
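The caching idea mentioned under “More API calls” could look roughly like the sketch below. It is not any vendor’s actual implementation: the function name `needs_deep_rescan`, the `min_changes` threshold, and the example URLs are all hypothetical. The idea is to compare the cached first-page results with a fresh first-page fetch and pay for the nine deeper requests only when something moved.

```python
def needs_deep_rescan(previous_top, current_top, min_changes=1):
    """Decide whether pages 2-10 should be fetched again for a keyword.

    previous_top / current_top: ordered lists of URLs from the first
    results page (cached scan vs. fresh scan).
    Returns True when at least `min_changes` positions hold a different URL.
    """
    if len(previous_top) != len(current_top):
        return True  # the page layout itself changed, so rescan to be safe
    changes = sum(1 for old, new in zip(previous_top, current_top) if old != new)
    return changes >= min_changes


cached = ["https://a.example/", "https://b.example/", "https://c.example/"]
unchanged = ["https://a.example/", "https://b.example/", "https://c.example/"]
swapped = ["https://b.example/", "https://a.example/", "https://c.example/"]

print(needs_deep_rescan(cached, unchanged))  # False: reuse the cached deep results
print(needs_deep_rescan(cached, swapped))    # True: two positions changed
```

A higher `min_changes` value trades freshness of deep data for fewer requests.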

AccuRanker & Others

AccuRanker, a well-known rank tracker, was among the first to deliver a comprehensive update. They announced a change to their default behavior, which now focuses on and efficiently tracks the Top 30 daily and the Top 100 only twice a month. This enables daily tactical insight while maintaining a strategic view of the entire competitive landscape.

Some tools, such as SISTRIX, eventually went beyond delaying their desktop SERP data updates and stopped them completely. They have shifted focus to the mobile index and valuable SERP features to control the cost of desktop scraping.

Common Fix Approaches

Typical workarounds SEO tools use include:

- Pagination & Cost Management: Instead of one request, 10 paginated requests are used to cover positions 1–100. Because each full scan now costs 10 times as much, scan frequency is reduced (e.g., to weekly or bi-weekly) to keep subscription prices stable.

- Prioritization: Most organic traffic studies conclude that the Top 20–30 results are the most productive. As a result, tracking depth is usually limited to these positions, and only a small set of keywords is scanned deeply.

- Alternative Data Sources: With reduced SERP scraping, tools supplement data with clickstream inputs and stronger GSC API integrations, effectively becoming data aggregators blending first-party and proprietary data.
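To make the cost trade-off concrete, here is a rough sketch of the request arithmetic. It assumes 10 results per request, a 30-day month, and a hypothetical portfolio of 1,000 keywords; the tiered schedule mirrors the “Top 30 daily, Top 100 twice a month” pattern described above, simplified so that the two deep-scan days replace the shallow scan on those days.

```python
import math

RESULTS_PER_PAGE = 10  # assumption: each request now returns 10 results


def requests_for_depth(depth, per_page=RESULTS_PER_PAGE):
    """Number of requests needed to cover the top `depth` results."""
    return math.ceil(depth / per_page)


def start_offsets(depth, per_page=RESULTS_PER_PAGE):
    """Zero-based result offset of each page needed to cover `depth` results."""
    return list(range(0, depth, per_page))


def monthly_requests(schedule, keywords, per_page=RESULTS_PER_PAGE):
    """schedule: list of (depth, scans_per_month) pairs applied to every keyword."""
    per_keyword = sum(requests_for_depth(d, per_page) * scans for d, scans in schedule)
    return per_keyword * keywords


KEYWORDS = 1000  # hypothetical portfolio size

legacy = monthly_requests([(100, 30)], KEYWORDS, per_page=100)  # one request per scan
full_daily = monthly_requests([(100, 30)], KEYWORDS)            # ten requests per scan
tiered = monthly_requests([(30, 28), (100, 2)], KEYWORDS)       # shallow daily, deep twice

print(start_offsets(30))           # [0, 10, 20]
print(legacy, full_daily, tiered)  # 30000 300000 104000
```

Under these assumptions, the tiered schedule costs about a third of full daily pagination, though still more than three times the old single-request approach.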

What SEOs Should Do Right Now

The first thing SEO practitioners must do is not panic. Instead, verify how the site is actually performing, rather than relying on what the altered metrics suggest, and communicate the findings clearly and honestly to everyone concerned.

Validate With Google Search Console

The most crucial step is to check all data from third-party tools against Google Search Console (GSC). GSC data is the source of truth because it is reported directly by Google.

- Check Clicks and CTR: If your Impressions have dropped while Clicks have held steady (so the Click-Through Rate (CTR) has stayed the same or risen), your traffic and rankings are almost certainly intact. Stable Clicks in GSC, confirmed by stable Sessions in Google Analytics 4 (GA4), are the final proof that no actual traffic was lost.

- Annotate the Change: Clearly mark mid-September 2025 in your GSC reports and all internal reporting dashboards as the date when impression and average position data changed. Such an annotation prevents future historical analyses from misinterpreting the large discontinuity in impression data.

- Compare Desktop vs. Mobile: Confirm that the largest drop in impressions occurred on desktop, the channel most affected by scraping bots. If it did, the change is technical, not performance-driven.
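The checks above can be turned into a small triage helper. The sketch below is illustrative only: the function names, the 10% click tolerance, and the 20% impression-drop threshold are assumptions to tune for your own data, and the figures are hypothetical numbers for two comparable periods before and after mid-September 2025.

```python
def pct_change(before, after):
    """Relative change from `before` to `after` (e.g. -0.25 means a 25% drop)."""
    return (after - before) / before


def classify_shift(before, after, click_tolerance=0.10, impression_drop=0.20):
    """Label a before/after pair of GSC totals.

    before / after: dicts with 'clicks' and 'impressions' for comparable periods.
    Thresholds are hypothetical defaults, not Google guidance.
    """
    clicks_delta = pct_change(before["clicks"], after["clicks"])
    impressions_delta = pct_change(before["impressions"], after["impressions"])
    if impressions_delta <= -impression_drop and abs(clicks_delta) <= click_tolerance:
        return "measurement reset"
    if clicks_delta < -click_tolerance:
        return "possible real decline"
    return "no significant change"


desktop = classify_shift(
    {"clicks": 1000, "impressions": 80000},
    {"clicks": 980, "impressions": 35000},
)
mobile = classify_shift(
    {"clicks": 2000, "impressions": 60000},
    {"clicks": 1950, "impressions": 57000},
)
print("desktop:", desktop)  # desktop: measurement reset
print("mobile:", mobile)    # mobile: no significant change
```

Running it per device makes the desktop-versus-mobile comparison explicit, and a “possible real decline” label is the cue to check GA4 sessions before drawing conclusions.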

Cross-Tool Verification

Don’t put all your trust in a single SEO tool’s proprietary visibility score. Combine Google Analytics 4 (GA4) for traffic and conversion data with GSC for impressions and position data, and check any rank drop reported by a rank tracker against both.

If one tool indicates a decline, verify it with another; actual user behavior metrics are the final arbiter. Building multiple tools and cross-validation into weekly reporting continuously strengthens data resilience.

Adjust Reporting

Client and stakeholder reporting should change immediately: stop treating impressions and average position as headline KPIs, and instead highlight the metrics that reflect user value.

- Organic Clicks/Sessions: This metric shows the number of visitors who came to the website. Since it is not affected by the &num=100 technical change, it is the most accurate measure of a successful top-of-funnel performance.

- Conversions/Revenue: The ultimate business outcome. Tracking conversions not only measures sales but also evaluates how effectively traffic is being turned into tangible results. It provides insights into campaign efficiency and helps prioritize high-impact strategies for maximum ROI.

- Top 10/Top 20 Rankings: Focus on the range of positions that actually attracts the most traffic. Report on “the performance of high-value keywords” instead of “the total number of tracked keywords.”

Educate Clients & Teams

Clear and consistent communication is the basis of everything. Stay close to your clients and internal teams, and explain that the change in reported data is a technical fix by Google that removes bot-inflated metrics, not a decline in their website’s performance or ranking health.

Back this up by highlighting the stability in clicks and traffic. Develop a brief, single-page FAQ or ‘Data Shift Explainer’ that answers the most frequently asked questions and eases any doubts raised by tool dashboards.

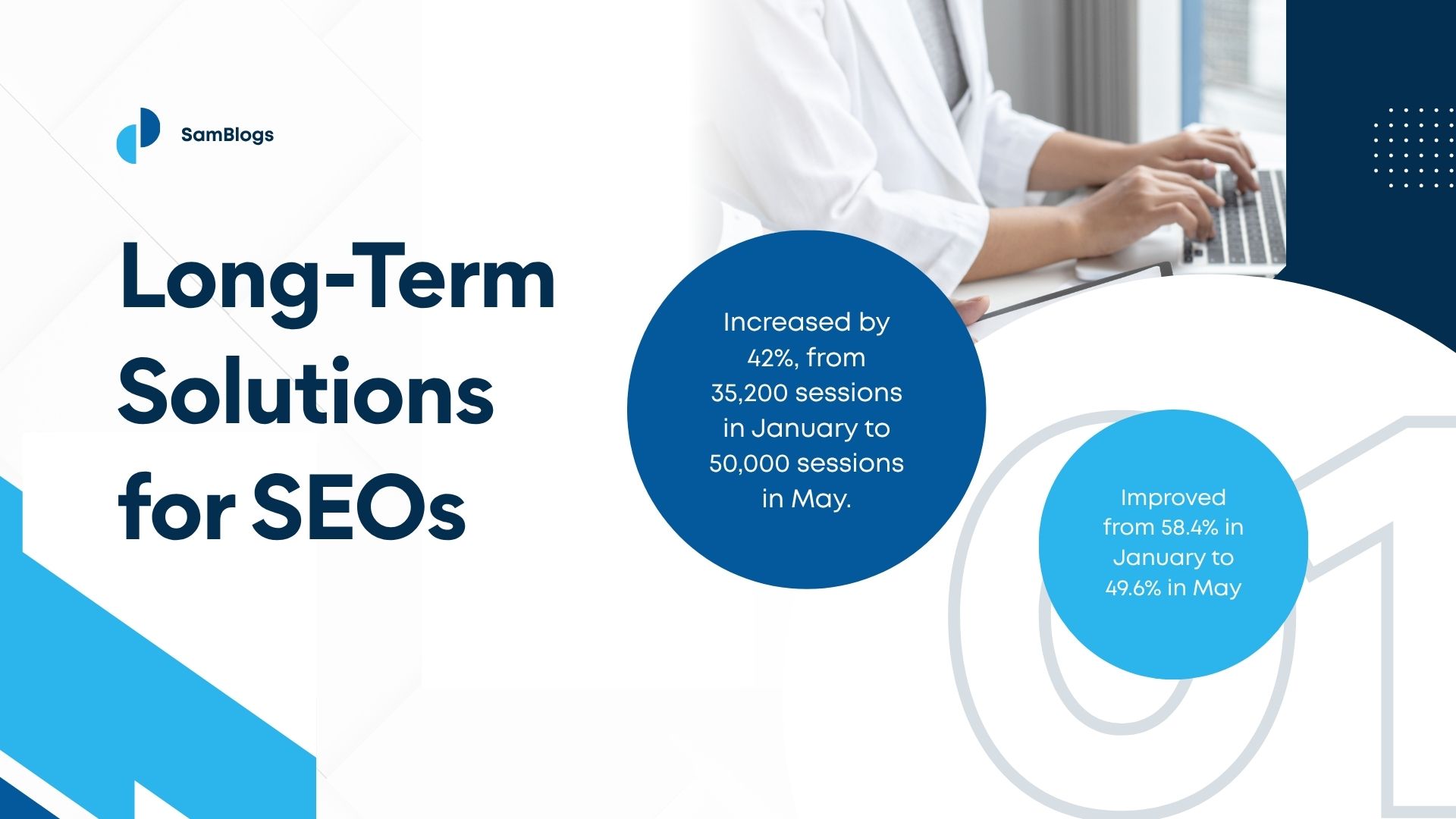

Long-Term Solutions for SEOs

The removal of &num=100 is a stark reminder that we cannot rely on a single third-party tool to do our SEO work.

A long-term practice must start with in-house research, a broader set of metrics, and an approach that is resilient to platform changes.

Diversify Metrics

Dependence on synthetic metrics such as Visibility Score, which proved extremely sensitive to this change, must be reduced. Long-term success means focusing on Generative Engine Optimization (GEO) and on-site metrics:

- Generative AI Visibility: Content becomes more visible when it is referenced in AI Overviews and chatbots, which typically requires highly structured, Q&A-style content and strong entity-based authority. Many SEOs now track inclusion and position within AI Overviews as a core visibility metric, using specialized third-party SERP APIs that support this feature.

- Engagement Metrics: Time on Page, Bounce Rate, and Conversion Rate are among the few metrics that reveal actual user value and are not influenced by changes in SERP scraping. These numbers indicate content quality and customer satisfaction, which are becoming increasingly important as ranking factors, regardless of how easy the data is to access.

- Core Web Vitals and UX: Make technical SEO performance the most important KPI, as Google directly rewards better user experience with higher placement.

Adopt Smarter Tracking

Rather than getting caught up in the monotonous chore of monitoring 100 or more positions for thousands of keywords, an SEO manager should focus on:

- Rethinking Keyword Lists: Drastically narrow rank-tracking lists to focus mainly on money keywords and on keywords where you sit just behind your nearest competitors (positions 11-30) and realistically have a chance of reaching the first page. This rationalization not only saves a lot of money on tools but also ensures that the remaining budget is spent on the most actionable data, which supports better decisions.

- Utilizing the GSC API: Use the GSC API for large-scale impression and position analysis, and consider it the main, cost-free source for broad visibility insights. This is then supported by more expensive, targeted rank checks from third-party tools. Building internal dashboards that directly link to the GSC API results in a strong ‘source of truth’ for impression and query data.
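As a starting point for such a dashboard, the sketch below builds a request body for the Search Console API’s Search Analytics query method and filters the returned rows down to positions 11-30. The helper names and sample rows are hypothetical. The actual call, which needs an authenticated google-api-python-client service and a verified property, is shown only as a comment because credential setup is outside the scope of this example.

```python
def build_query_body(start_date, end_date, row_limit=1000, start_row=0):
    """Request body for a Search Analytics query grouped by search query."""
    return {
        "startDate": start_date,  # YYYY-MM-DD
        "endDate": end_date,      # YYYY-MM-DD
        "dimensions": ["query"],
        "rowLimit": row_limit,
        "startRow": start_row,    # increase this to page through large result sets
    }


def striking_distance(rows, low=11, high=30):
    """Keep rows whose average position falls within [low, high], best first."""
    matches = [
        {"query": r["keys"][0], "position": r["position"], "clicks": r["clicks"]}
        for r in rows
        if low <= r["position"] <= high
    ]
    return sorted(matches, key=lambda m: m["position"])


body = build_query_body("2025-09-15", "2025-10-15")
# With an authenticated service object (assumed, not created here):
#   response = service.searchanalytics().query(siteUrl=site_url, body=body).execute()
#   rows = response.get("rows", [])

# Hypothetical rows in the shape the API returns.
rows = [
    {"keys": ["blue widgets"], "clicks": 120, "impressions": 3000, "ctr": 0.04, "position": 3.2},
    {"keys": ["widget repair"], "clicks": 8, "impressions": 900, "ctr": 0.0089, "position": 14.6},
    {"keys": ["cheap widgets"], "clicks": 2, "impressions": 700, "ctr": 0.0029, "position": 27.1},
    {"keys": ["widget history"], "clicks": 0, "impressions": 150, "ctr": 0.0, "position": 58.0},
]

for match in striking_distance(rows):
    print(match["query"], match["position"])
```

The filtered list is a natural candidate for the narrowed tracking list described above, with paid rank checks reserved for those keywords.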

Expect Future Changes

Google’s move signals a broader change: it is steadily restricting third-party access to raw SERP data and favoring its own data channels. SEOs need to be prepared for a future in which access to deep-page rankings is either very expensive or unavailable altogether.

The industry will probably see scraping restrictions roll out gradually as Google tightens its defenses against LLMs and AI platforms that use its data, a strategy that a few analysts refer to as “data gatekeeping.” The focus has to be on creating user experiences so much better than the competition’s that search engines cannot ignore them, rather than on scraping search results.

Conclusion

The end of the &num=100 parameter is more than a mere technical change; it is a turning point for the SEO industry. Without the influence of impression data inflation caused by scraping, a more transparent and user-centric view of Google Search Console performance is achievable.

The switch creates severe problems for SEO tool providers, who face high operational costs and, as a result, will have to devote most of their resources to the top-ranking results. Practitioners, on the other hand, get a very clear message: stop relying on vanity metrics and scraped data, which are not reliable.

Instead, give your attention to the things that really matter, such as clicks, conversions, and a content strategy built on quality. In the end, real SEO growth comes not from chasing inflated numbers but from engaging actual users.